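A Kubernetes logging system can be built with the EFK stack, named after the initials of Elasticsearch, Fluentd, and Kibana.

Fluentd collects the logs, Elasticsearch stores them, and Kibana queries the stored data and visualizes it.

Apply the manifests below in the order listed to set up the stack.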
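The Elasticsearch and Kibana manifests in this post are deployed into the `logging` namespace, but none of the listed files creates it. Assuming the namespace does not exist in the cluster yet, a minimal manifest along these lines could be applied before the others. The file name `logging-namespace.yaml` is hypothetical and not part of the original setup.

```yaml
# logging-namespace.yaml (hypothetical file name, not one of the original manifests)
# Creates the namespace that the elasticsearch-*.yaml and kibana.yaml manifests refer to.
# Assumption: the namespace has not already been created by other means.
apiVersion: v1
kind: Namespace
metadata:
  name: logging
```

The Fluentd manifests use `kube-system`, which already exists in every cluster, so only `logging` needs this step.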

- fluentd-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: fluentd-config
  namespace: kube-system
data:
  fluent.conf: |
    #@include systemd.conf
    @include kubernetes.conf
    @include conf.d/*.conf

    <match **>
      @type elasticsearch
      @id out_es
      @log_level info
      include_tag_key true
      host "#{ENV['FLUENT_ELASTICSEARCH_HOST']}"
      port "#{ENV['FLUENT_ELASTICSEARCH_PORT']}"
      path "#{ENV['FLUENT_ELASTICSEARCH_PATH']}"
      scheme "#{ENV['FLUENT_ELASTICSEARCH_SCHEME'] || 'http'}"
      ssl_verify "#{ENV['FLUENT_ELASTICSEARCH_SSL_VERIFY'] || 'true'}"
      ssl_version "#{ENV['FLUENT_ELASTICSEARCH_SSL_VERSION'] || 'TLSv1_2'}"
      user "#{ENV['FLUENT_ELASTICSEARCH_USER'] || use_default}"
      password "#{ENV['FLUENT_ELASTICSEARCH_PASSWORD'] || use_default}"
      reload_connections "#{ENV['FLUENT_ELASTICSEARCH_RELOAD_CONNECTIONS'] || 'false'}"
      reconnect_on_error "#{ENV['FLUENT_ELASTICSEARCH_RECONNECT_ON_ERROR'] || 'true'}"
      reload_on_failure "#{ENV['FLUENT_ELASTICSEARCH_RELOAD_ON_FAILURE'] || 'true'}"
      log_es_400_reason "#{ENV['FLUENT_ELASTICSEARCH_LOG_ES_400_REASON'] || 'false'}"
      #logstash_prefix "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_PREFIX'] || 'logstash'}"
      #logstash_prefix "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_PREFIX'] || 'logstash-${record['kubernetes']['namespace_name']}'}"
      logstash_prefix logstash-${$.kubernetes.namespace_name}
      logstash_dateformat "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_DATEFORMAT'] || '%Y.%m.%d'}"
      logstash_format "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_FORMAT'] || 'true'}"
      index_name "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_INDEX_NAME'] || 'logstash'}"
      target_index_key "#{ENV['FLUENT_ELASTICSEARCH_TARGET_INDEX_KEY'] || use_nil}"
      type_name "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_TYPE_NAME'] || 'fluentd'}"
      include_timestamp "#{ENV['FLUENT_ELASTICSEARCH_INCLUDE_TIMESTAMP'] || 'false'}"
      template_name "#{ENV['FLUENT_ELASTICSEARCH_TEMPLATE_NAME'] || use_nil}"
      template_file "#{ENV['FLUENT_ELASTICSEARCH_TEMPLATE_FILE'] || use_nil}"
      template_overwrite "#{ENV['FLUENT_ELASTICSEARCH_TEMPLATE_OVERWRITE'] || use_default}"
      sniffer_class_name "#{ENV['FLUENT_SNIFFER_CLASS_NAME'] || 'Fluent::Plugin::ElasticsearchSimpleSniffer'}"
      request_timeout "#{ENV['FLUENT_ELASTICSEARCH_REQUEST_TIMEOUT'] || '5s'}"
      suppress_type_name "#{ENV['FLUENT_ELASTICSEARCH_SUPPRESS_TYPE_NAME'] || 'true'}"
      enable_ilm "#{ENV['FLUENT_ELASTICSEARCH_ENABLE_ILM'] || 'false'}"
      ilm_policy_id "#{ENV['FLUENT_ELASTICSEARCH_ILM_POLICY_ID'] || use_default}"
      ilm_policy "#{ENV['FLUENT_ELASTICSEARCH_ILM_POLICY'] || use_default}"
      ilm_policy_overwrite "#{ENV['FLUENT_ELASTICSEARCH_ILM_POLICY_OVERWRITE'] || 'false'}"
      <buffer tag, $.kubernetes.namespace_name>
        @type memory
        flush_thread_count "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_FLUSH_THREAD_COUNT'] || '8'}"
        flush_interval "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_FLUSH_INTERVAL'] || '5s'}"
        chunk_limit_size "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_CHUNK_LIMIT_SIZE'] || '2M'}"
        queue_limit_length "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_QUEUE_LIMIT_LENGTH'] || '32'}"
        retry_max_interval "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_RETRY_MAX_INTERVAL'] || '30'}"
        retry_forever true
      </buffer>
    </match>
  tail_container_parse.conf: |-
    <parse>
      @type cri
    </parse>
  kubernetes.conf: |-
    #<match fluent.**>
    # @type null
    #</match>
    <label @FLUENT_LOG>
      <match fluent.*>
        @type stdout
      </match>
    </label>

    <source>
      @type tail
      @id in_tail_container_logs
      path /var/log/containers/*.log
      exclude_path ["/var/log/containers/kube-apiserver*"]
      pos_file /var/log/fluentd-containers.log.pos
      tag kubernetes.*
      read_from_head true
      format cri
      time_format %Y-%m-%dT%H:%M:%S.%N%z
    </source>

    <source>
      @type tail
      @id in_tail_minion
      path /var/log/salt/minion
      pos_file /var/log/fluentd-salt.pos
      tag salt
      format /^(?<time>[^ ]* [^ ,]*)[^\[]*\[[^\]]*\]\[(?<severity>[^ \]]*) *\] (?<message>.*)$/
      time_format %Y-%m-%d %H:%M:%S
    </source>

    <source>
      @type tail
      @id in_tail_startupscript
      path /var/log/startupscript.log
      pos_file /var/log/fluentd-startupscript.log.pos
      tag startupscript
      format syslog
    </source>

    <source>
      @type tail
      @id in_tail_docker
      path /var/log/docker.log
      pos_file /var/log/fluentd-docker.log.pos
      tag docker
      format /^time="(?<time>[^)]*)" level=(?<severity>[^ ]*) msg="(?<message>[^"]*)"( err="(?<error>[^"]*)")?( statusCode=(?<status_code>\d+))?/
    </source>

    <source>
      @type tail
      @id in_tail_etcd
      path /var/log/etcd.log
      pos_file /var/log/fluentd-etcd.log.pos
      tag etcd
      format none
    </source>

    <source>
      @type tail
      @id in_tail_kubelet
      multiline_flush_interval 5s
      path /var/log/kubelet.log
      pos_file /var/log/fluentd-kubelet.log.pos
      tag kubelet
      format kubernetes
    </source>

    <source>
      @type tail
      @id in_tail_kube_proxy
      multiline_flush_interval 5s
      path /var/log/kube-proxy.log
      pos_file /var/log/fluentd-kube-proxy.log.pos
      tag kube-proxy
      format kubernetes
    </source>

    <source>
      @type tail
      @id in_tail_kube_apiserver
      multiline_flush_interval 5s
      path /var/log/kube-apiserver.log
      pos_file /var/log/fluentd-kube-apiserver.log.pos
      tag kube-apiserver
      format kubernetes
    </source>

    <source>
      @type tail
      @id in_tail_kube_controller_manager
      multiline_flush_interval 5s
      path /var/log/kube-controller-manager.log
      pos_file /var/log/fluentd-kube-controller-manager.log.pos
      tag kube-controller-manager
      format kubernetes
    </source>

    <source>
      @type tail
      @id in_tail_kube_scheduler
      multiline_flush_interval 5s
      path /var/log/kube-scheduler.log
      pos_file /var/log/fluentd-kube-scheduler.log.pos
      tag kube-scheduler
      format kubernetes
    </source>

    <source>
      @type tail
      @id in_tail_rescheduler
      multiline_flush_interval 5s
      path /var/log/rescheduler.log
      pos_file /var/log/fluentd-rescheduler.log.pos
      tag rescheduler
      format kubernetes
    </source>

    <source>
      @type tail
      @id in_tail_glbc
      multiline_flush_interval 5s
      path /var/log/glbc.log
      pos_file /var/log/fluentd-glbc.log.pos
      tag glbc
      format kubernetes
    </source>

    <source>
      @type tail
      @id in_tail_cluster_autoscaler
      multiline_flush_interval 5s
      path /var/log/cluster-autoscaler.log
      pos_file /var/log/fluentd-cluster-autoscaler.log.pos
      tag cluster-autoscaler
      format kubernetes
    </source>

    # Example:
    # 2017-02-09T00:15:57.992775796Z AUDIT: id="90c73c7c-97d6-4b65-9461-f94606ff825f" ip="104.132.1.72" method="GET" user="kubecfg" as="<self>" asgroups="<lookup>" namespace="default" uri="/api/v1/namespaces/default/pods"
    # 2017-02-09T00:15:57.993528822Z AUDIT: id="90c73c7c-97d6-4b65-9461-f94606ff825f" response="200"
    <source>
      @type tail
      @id in_tail_kube_apiserver_audit
      multiline_flush_interval 5s
      path /var/log/kubernetes/kube-apiserver-audit.log
      pos_file /var/log/kube-apiserver-audit.log.pos
      tag kube-apiserver-audit
      format multiline
      format_firstline /^\S+\s+AUDIT:/
      # Fields must be explicitly captured by name to be parsed into the record.
      # Fields may not always be present, and order may change, so this just looks
      # for a list of key="\"quoted\" value" pairs separated by spaces.
      # Unknown fields are ignored.
      # Note: We can't separate query/response lines as format1/format2 because
      # they don't always come one after the other for a given query.
      format1 /^(?<time>\S+) AUDIT:(?: (?:id="(?<id>(?:[^"\\]|\\.)*)"|ip="(?<ip>(?:[^"\\]|\\.)*)"|method="(?<method>(?:[^"\\]|\\.)*)"|user="(?<user>(?:[^"\\]|\\.)*)"|groups="(?<groups>(?:[^"\\]|\\.)*)"|as="(?<as>(?:[^"\\]|\\.)*)"|asgroups="(?<asgroups>(?:[^"\\]|\\.)*)"|namespace="(?<namespace>(?:[^"\\]|\\.)*)"|uri="(?<uri>(?:[^"\\]|\\.)*)"|response="(?<response>(?:[^"\\]|\\.)*)"|\w+="(?:[^"\\]|\\.)*"))*/
      time_format %FT%T.%L%Z
    </source>

    <filter kubernetes.**>
      @type kubernetes_metadata
      @id filter_kube_metadata
      kubernetes_url "#{ENV['FLUENT_FILTER_KUBERNETES_URL'] || 'https://' + ENV.fetch('KUBERNETES_SERVICE_HOST') + ':' + ENV.fetch('KUBERNETES_SERVICE_PORT') + '/api'}"
      verify_ssl "#{ENV['KUBERNETES_VERIFY_SSL'] || true}"
      ca_file "#{ENV['KUBERNETES_CA_FILE']}"
      skip_labels "#{ENV['FLUENT_KUBERNETES_METADATA_SKIP_LABELS'] || 'false'}"
      skip_container_metadata "#{ENV['FLUENT_KUBERNETES_METADATA_SKIP_CONTAINER_METADATA'] || 'false'}"
      skip_master_url "#{ENV['FLUENT_KUBERNETES_METADATA_SKIP_MASTER_URL'] || 'false'}"
      skip_namespace_metadata "#{ENV['FLUENT_KUBERNETES_METADATA_SKIP_NAMESPACE_METADATA'] || 'false'}"
      watch "#{ENV['FLUENT_KUBERNETES_WATCH'] || 'true'}"
    </filter>

- fluentd.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluentd
  namespace: kube-system
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - namespaces
  verbs:
  - get
  - list
  - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd
roleRef:
  kind: ClusterRole
  name: fluentd
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: fluentd
  namespace: kube-system
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
  namespace: kube-system
  labels:
    k8s-app: fluentd-logging
    version: v1
spec:
  selector:
    matchLabels:
      k8s-app: fluentd-logging
      version: v1
  template:
    metadata:
      labels:
        k8s-app: fluentd-logging
        version: v1
    spec:
      serviceAccount: fluentd
      serviceAccountName: fluentd
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      containers:
      - name: fluentd
        image: fluent/fluentd-kubernetes-daemonset:v1-debian-elasticsearch
        env:
        - name: FLUENT_ELASTICSEARCH_HOST
          value: "elasticsearch-in.logging.svc.cluster.local"
        - name: FLUENT_ELASTICSEARCH_PORT
          value: "9200"
        - name: FLUENT_ELASTICSEARCH_SCHEME
          value: "http"
        # Option to configure elasticsearch plugin with self signed certs
        # ================================================================
        - name: FLUENT_ELASTICSEARCH_SSL_VERIFY
          value: "true"
        # Option to configure elasticsearch plugin with tls
        # ================================================================
        - name: FLUENT_ELASTICSEARCH_SSL_VERSION
          value: "TLSv1_2"
        # X-Pack Authentication
        # =====================
        - name: FLUENT_ELASTICSEARCH_USER
          value: "elastic"
        - name: FLUENT_ELASTICSEARCH_PASSWORD
          value: "changeme"
        - name: FLUENTD_SYSTEMD_CONF
          value: "disable"
        - name: K8S_NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
        - name: config
          mountPath: /fluentd/etc
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
      - name: config
        configMap:
          name: fluentd-config

- elasticsearch-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: elasticsearch-pv-claim
  namespace: logging
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: nfs-client

- elasticsearch-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: logging
  name: elasticsearch-master-config
  labels:
    app: elasticsearch
    role: master
data:
  elasticsearch.yml: |-
    cluster.name: Cluster
    network.host: 0.0.0.0
    path.repo: ["/usr/share/elasticsearch/backup"]

- elasticsearch.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: elasticsearch
  namespace: logging
  labels:
    app: elasticsearch
spec:
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      containers:
      - name: elasticsearch
        image: elasticsearch:7.8.0
        env:
        - name: discovery.type
          value: "single-node"
        - name: ES_JAVA_OPTS
          value: "-Xms2048m -Xmx2048m"
        ports:
        - containerPort: 9200
        - containerPort: 9300
        volumeMounts:
        - mountPath: /usr/share/elasticsearch/data
          name: elasticsearchdata
        - name: config
          mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
          readOnly: true
          subPath: elasticsearch.yml
        - name: log-backup
          mountPath: /usr/share/elasticsearch/backup
      volumes:
      - name: elasticsearchdata
        persistentVolumeClaim:
          claimName: elasticsearch-pv-claim
      - name: config
        configMap:
          name: elasticsearch-master-config
      - name: log-backup
        hostPath:
          path: /log-backup
          type: Directory
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: elasticsearch
  name: elasticsearch-in
  namespace: logging
spec:
  ports:
  - name: elasticsearch-rest
    port: 9200
    protocol: TCP
  - name: elasticsearch-nodecom
    port: 9300
    protocol: TCP
  selector:
    app: elasticsearch

- kibana.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: logging
  labels:
    app: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
      - name: kibana
        image: elastic/kibana:7.8.0
        env:
        - name: SERVER_NAME
          value: "kibana.kubenetes.example.com"
        - name: ELASTICSEARCH_HOSTS
          value: "http://elasticsearch-in:9200"
        ports:
        - containerPort: 5601
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: kibana
  name: kibana-svc
  namespace: logging
spec:
  type: NodePort
  ports:
  - port: 5601
    targetPort: 5601
    nodePort: 30005
    protocol: TCP
  selector:
    app: kibana

Kibana can then be reached at http://[master node IP]:30005.

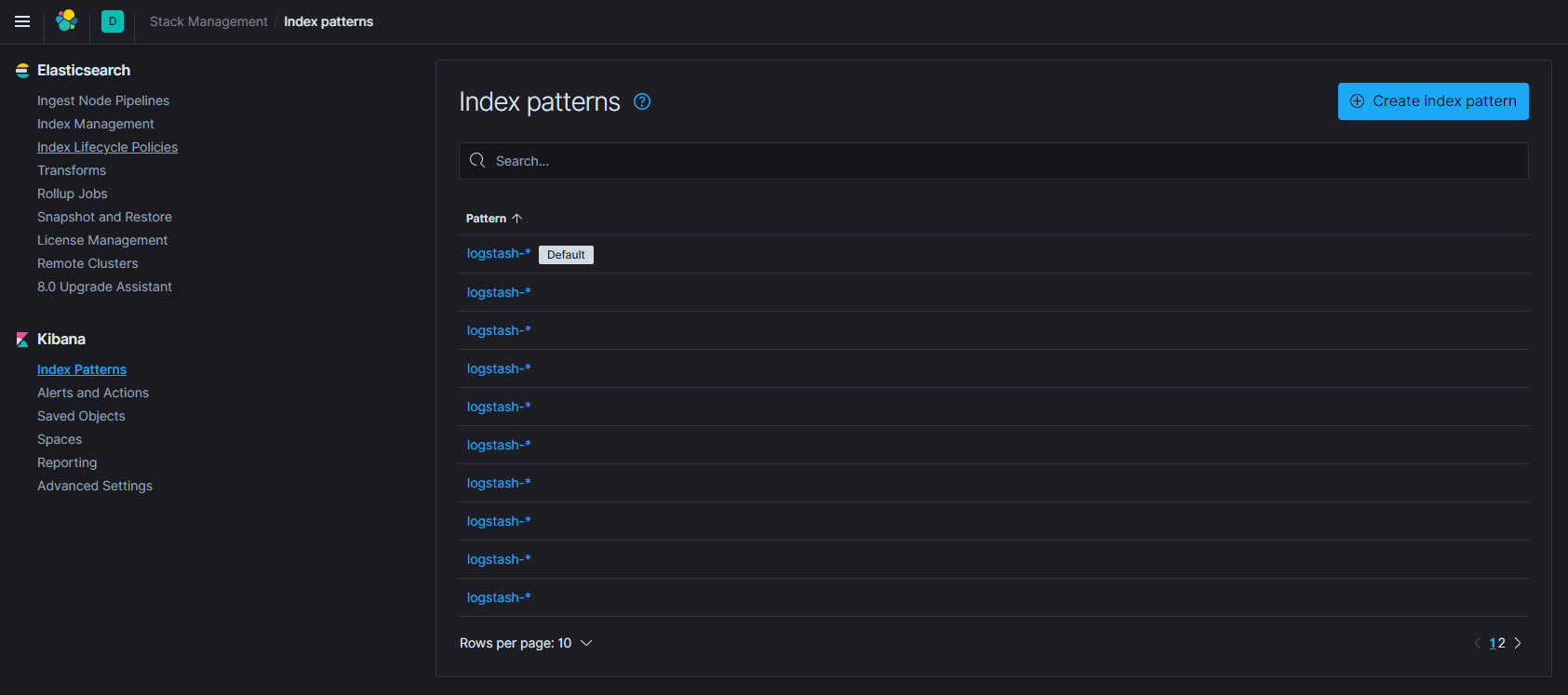

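After registering `logstash-*` as an index pattern in Kibana, the collected logs can be browsed. The pattern matches because the Fluentd output is configured with `logstash_prefix logstash-${$.kubernetes.namespace_name}` and the `%Y.%m.%d` date format, so indices are named `logstash-<namespace>-YYYY.MM.DD`.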
